Research Interests

The Culham Lab is interested in how vision is used for perception and to guide actions in human observers. We use several techniques from cognitive neuroscience to understand human vision. Functional magnetic resonance imaging (fMRI) is a safe way to study which regions of the brain are active when people view different visual stimuli or use visual information to perform different tasks. Behavioral testing tells us about the visual experiences and limits of normal subjects. Neuropsychological testing tells us how these abilities are compromised in patients with damage to different areas of the brain. Neurostimulation reveals how perturbations of brain processing affect behavior.

The main theme of the lab is an approach we term Immersive Neuroscience. We believe that although reductionism has been an invaluable tool for first-generation cognitive neuroscience, the field can now benefit from a complementary approach that brings our research closer to the real world. Over two decades, we have successfully studied actions (grasping, reaching, tool use) and visual perception using real actions and real objects. In many cases, we have identified differences in behavior and brain processing between real stimuli and common proxies (especially real, tangible objects vs. images) and between real tasks and common proxies (actions with full feedback vs. open-loop pantomimed, imagined or observed actions, which do not have complete feedback loops). Now we are extending these approaches to study a broader range of cognitive functions.

We take two strategies.

One strategy uses behavioral approaches and neuroscience techniques like optical neuroimaging to study real-world perception and action. Functional Near-Infrared Spectroscopy (fNIRS) is a non-invasive technique that measures human brain activity indirectly by recording how light of different wavelengths is modulated by blood oxygenation as the light passes through the superficial brain. While fNIRS shares some similarities with fMRI, it is much less sensitive to body movements and thus enables the study of a more natural range of real-world actions.

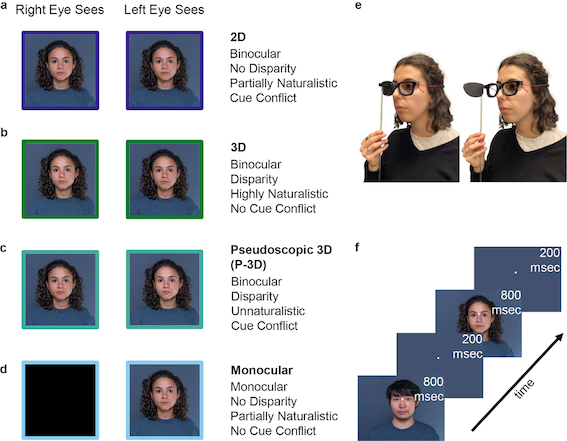

Although real-world environments may be the gold standard, many approaches, particularly fMRI, offer methodological advantages (e.g., high spatial resolution) but also impose constraints that make it challenging or sometimes impossible to study the full gamut of stimuli and tasks. The other strategy investigates the utility of Simulated Realities. We are now using 3D stimuli (rendered with stereo vision), virtual reality, and virtual gaming environments to develop naturalistic scenarios that provide greater experimental control and convenience than true reality.

Recent and current research questions in our lab include the following:

How does the processing of real-world stimuli and actions differ from that for commonly used proxies such as pictures and pantomimed, imagined or observed actions?

Which aspects of reality and virtual/augmented reality are critical for naturalistic behavior and brain responses? To what degree does realism depend upon 3D vision, actability, and awareness of realism (presence)?

Can the added engagement in VR and gaming environments tap into a diverse, robust range of cognitive functions? How are motor and cognitive functions in the brain affected by “closed-loop” processing (with predictions and feedback) vs. “open-loop” processing (classical stimulus-response relationships without full feedback loops)?

What can we learn about human vision from studying stimuli and actions that are more realistic and complex than those typically used? For example, how does the brain represent real-world size and distance?

Using optical neuroimaging, what can we learn about the neural basis of key actions in the action repertoire of primates (e.g., grasping and manipulation, feeding, defensive actions, locomotion) and especially humans (e.g., tool use, gestures, social interactions)?

How feasible is optical neuroimaging as a tool for non-invasive recording of brain states and the development of brain-computer interfaces?

Which brain areas are involved in various aspects of grasping and reaching tasks? How do these areas in the human brain relate to areas of the macaque monkey brain (based on reports from other labs)? What types of visual and motor information do these areas encode? How are these areas and networks modulated by experiences such as tool use?

How do vision-for-perception and vision-for-action rely on different brain areas? How are these interactions affected when the nature of the task is changed, for example by asking subjects to perform pretend (pantomimed) or delayed actions?

How are behavior, brain activation, and brain connectivity affected by focal brain lesions in interesting single case studies, including patients who cannot recognize objects but can act toward them?

Former honours student and current research assistant Sofia Varon has published her first paper: "Target interception in virtual reality is better for natural than for unnatural trajectories."